Choice is often seen as a tax on people’s time and attention. We make upward of 35,000 decisions each day, requiring mental energy that often leaves us feeling drained.1 AI-powered personalization promises a solution to decision fatigue that’s custom-tailored to our preferences. However, personalization comes with trade-offs: it’s also used to shape, influence and guide our everyday choices and actions. When algorithms make autonomous decisions on our behalf, the options available to us are hidden from view and we lose personal agency.

The Rise of Personalization

There was a time when companies had limited access to the personal data of potential customers. Even with the advent of the internet, there was still some separation between customers and companies due to limited access to personal computers. However, this changed once smartphones and constant online connectivity allowed for the collection of vast amounts of data through our online activities. The resulting influx of data enabled companies to target ads and drive their business metrics. For instance, if you search for the best running shoes or visit an online shoe store, you may start seeing shoe ads in the sidebar of every website you visit thereafter.

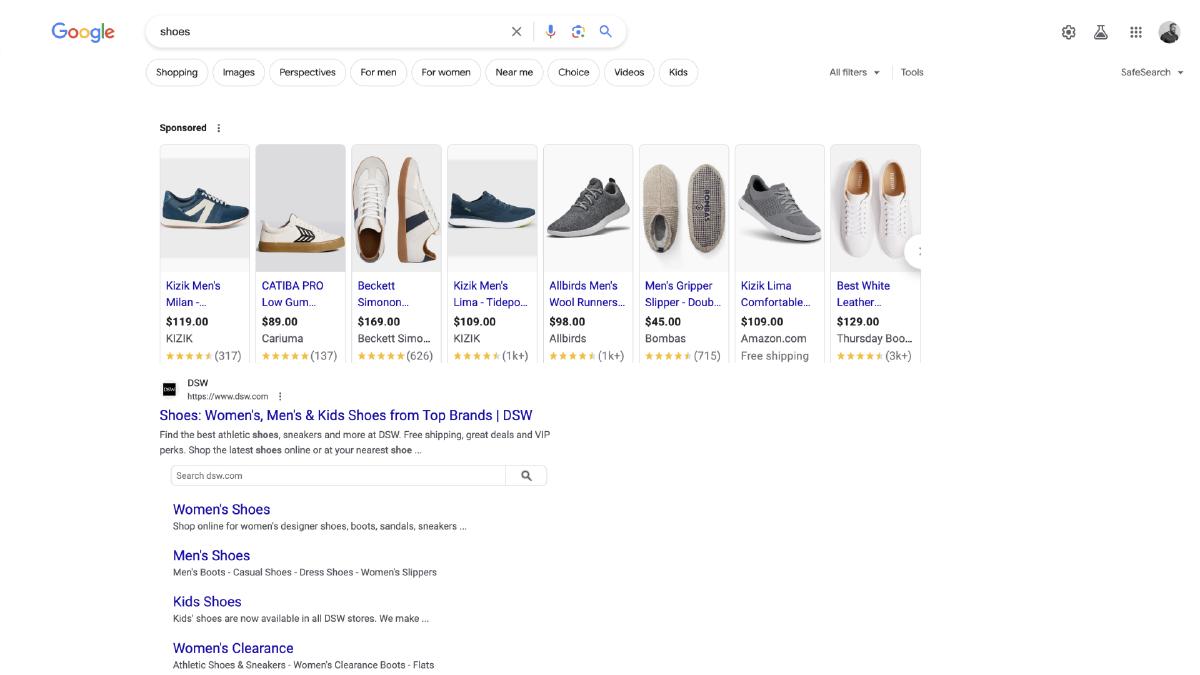

It wasn’t long before the results you’d see when using a search engine like Google were also determined by your demographics, location, interests, and search history. The intention was to serve up the best possible results and content that satisfies searchers’ needs by using personalized information about their online presence. For a time, paid results in Google search were identifiable and visually distinct from organic ones. This distinction has since eroded, and paid results are now harder to tell apart. Regardless, search still requires users to read through the results and spend mental energy choosing which link aligns with their interests.

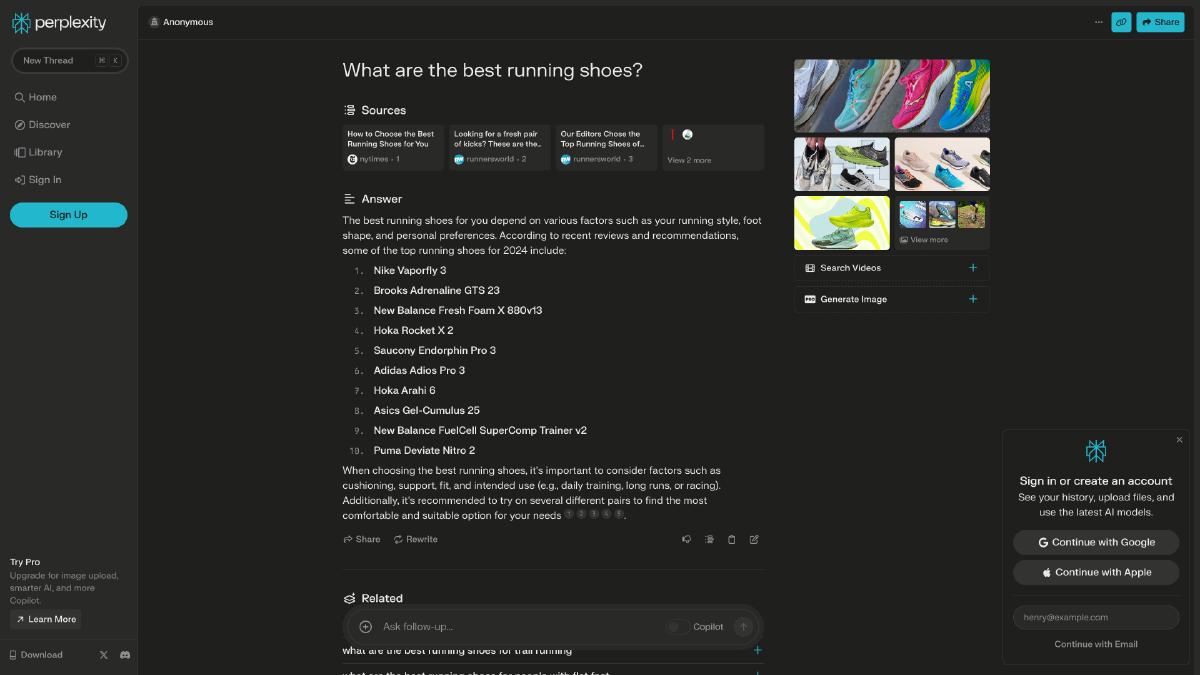

New generative AI capabilities promise to take the work out of searching by summarizing an answer to questions via an AI-powered snapshot of key information to consider, with links to dig deeper. Microsoft now also embeds generative AI via OpenAI’s GPT-4 into its search engine, Bing. Up-and-coming challengers promise an “AI-powered Swiss Army Knife for information discovery and curiosity” that eliminates the burden of choice almost entirely. The underlying premise in these evolutions of search is that people don’t want more choices; they want more confidence in the choices presented.

The reach of personalization within our digital lives now extends far beyond simple search results or ads. It also comes in the form of content tailored to our specific interests, and no platforms do this more effectively than social media. Social platforms employ machine learning algorithms to personalize users' experiences by providing predictive recommendations. They collect data and use the results to enhance their algorithms, creating a feedback loop of random reinforcement. Time spent interacting with content within the platform increases algorithmic quality, resulting in more time spent on the platform, more data collected, and more ads viewed.

Social media platforms aren’t the only ones making use of algorithms to personalize content for their users. According to Nielsen’s June 2023 Streaming Content Consumer Survey, customers spend an average of 10.5 minutes searching for something to watch. To make things worse, 20% say they don’t know what to watch before they start looking and end up doing something else when they can’t find anything. To counteract the rise of overwhelming choice, streaming services have started using personalization to recommend content that adapts to each user’s interests and can help expand those interests over time. Their algorithms are attuned closely enough to individual viewers that they can assign each recommendation a percentage match based on what you’ve watched in the past, estimating the likelihood that you’ll enjoy a particular film or series. It’s working, too: Netflix logged over 92 billion hours watched in just the first half of 2023.
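To make the idea of a percentage match concrete, here is a minimal sketch. It is not how any particular streaming service computes its score; it simply assumes each title is described by hypothetical genre weights and uses cosine similarity between a viewer's accumulated history and a candidate title. The function names `build_profile` and `match_percent` and the sample data are illustrative assumptions.

```python
import math


def build_profile(watch_history):
    """Sum genre weights across watched titles into one viewer profile."""
    profile = {}
    for title_features in watch_history:
        for genre, weight in title_features.items():
            profile[genre] = profile.get(genre, 0.0) + weight
    return profile


def match_percent(viewer_profile, title_features):
    """Cosine similarity between two genre-weight dicts, scaled to 0-100.

    Assumes all weights are non-negative, so the result stays in 0..100.
    """
    genres = set(viewer_profile) | set(title_features)
    dot = sum(
        viewer_profile.get(g, 0.0) * title_features.get(g, 0.0) for g in genres
    )
    norm_viewer = math.sqrt(sum(w * w for w in viewer_profile.values()))
    norm_title = math.sqrt(sum(w * w for w in title_features.values()))
    if norm_viewer == 0 or norm_title == 0:
        return 0  # nothing to compare against
    return round(100 * dot / (norm_viewer * norm_title))


# Hypothetical watch history: two titles, described by made-up genre weights.
history = [{"thriller": 1.0, "drama": 0.5}, {"thriller": 1.0}]
profile = build_profile(history)

print(match_percent(profile, {"thriller": 1.0}))  # prints 97
print(match_percent(profile, {"comedy": 1.0}))    # prints 0
```

Even in this toy version, the score only ever reflects what has already been watched, which is why a system like this tends to narrow choices unless it is deliberately designed to broaden them.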

Personalization is now considered an essential business strategy that’s made its way into almost every app and service we use. We have even come to expect personalized interactions and get frustrated when this doesn’t happen. We’re willing to make sacrifices for the convenience, but at what cost?

The Cost of Personalization

The convenience that personalization promises also comes with trade-offs at the expense of users. The “personalization paradox” refers to the clash between personalized experiences that save us time and concern about the personal data they require. People often want the convenience of personalization without sacrificing privacy, but striking the right balance can be challenging for companies. The vast amounts of personal data required for personalization have historically been governed by inconsistent regulation around what can be collected and how it can be used. Tension between the convenience of personalized experiences and concern for privacy and data security has led to ongoing debates about privacy, user consent, ethical data usage, and the need for regulations in the digital space. Thankfully, personal data that was once freely harvested as a resource and considered company property is now being treated as an asset owned by individuals and entrusted to companies. Consumer mistrust, government action and market competition have all contributed to driving change around personal data across the world.2

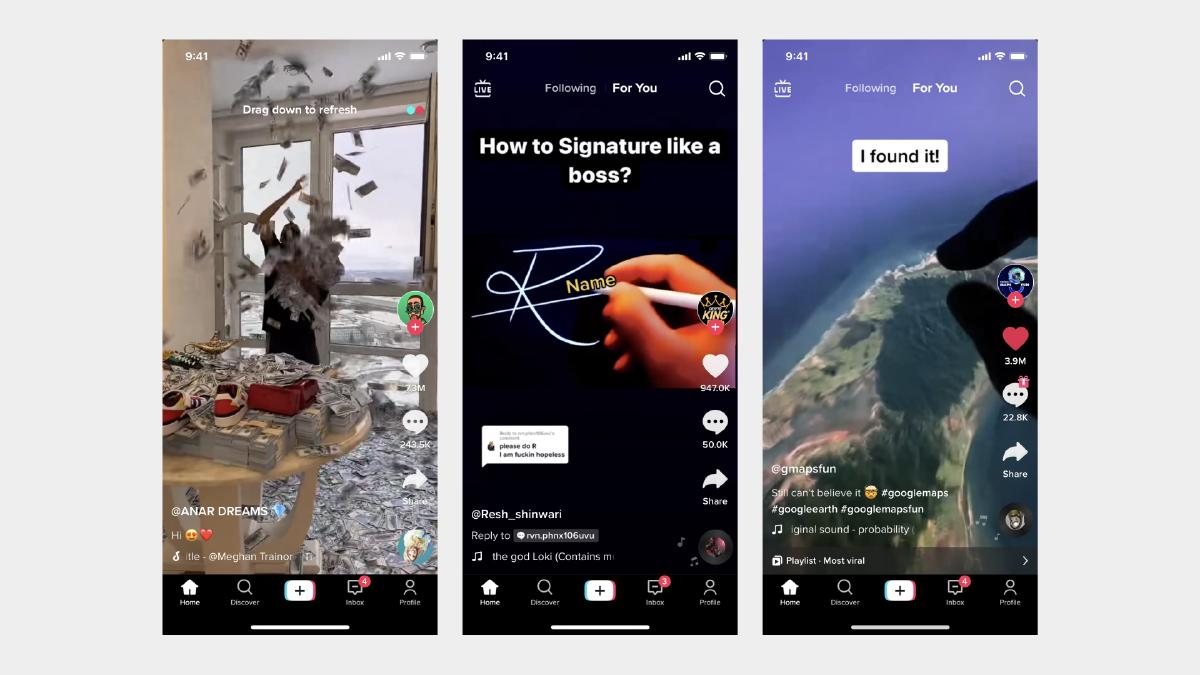

But privacy isn’t the only trade-off we make for the convenience of personalization. Platforms are now utilizing their interfaces to capture data from user interaction in order to personalize content recommendations engineered to keep users on the platform longer. TikTok’s meteoric success has been largely attributed to its ability to tap into the reward system of the brain through an endless stream of short videos selected specifically for each user, with the net effect of keeping them in a trance-like state on the platform.

Far from enhancing our ability to make the best decisions for ourselves, potent and addictive software can erode our free will by trapping us in loops of instant gratification. In the worst cases, it can feel like companies use our digital profiles as something akin to voodoo dolls, enticing us to buy, click, like, or consume content without our ever knowing that they are pulling on our behavioral levers to influence us.

Unfortunately, this degree of personalization can lure users deep into an addictive rabbit hole of increasingly extreme content and expose them to information and opinions that conform to and reinforce their pre-existing beliefs and biases (commonly referred to as a filter bubble).3 Research also suggests that prolonged use of TikTok can lead to a significant decrease in attention span, increased anxiety, depression and stress,4 and difficulty participating in activities that don’t offer instant gratification — a phenomenon known as ‘TikTok brain’. To make matters even worse, these side effects can surface after just 20 minutes of use and exacerbate existing mental health concerns by romanticizing, normalizing or encouraging suicide.5

However, the most alarming trade-off we make for personalization is the erosion of free will and personal autonomy that results from loops of instant gratification. Platforms compete in capturing our attention and exploiting our personal data for their own ends by luring us into infinite feeds optimized for maximum ‘stickiness’. The more time we spend interacting with content on the platform, the higher the algorithmic quality becomes, resulting in content that is more targeted at individual interests. It’s a feedback loop of random reinforcement designed to keep us captive, not provide value or meaningful connection with others.

These platforms have the potential to shape our behavior with subtle and subliminal cues, rewards, and punishments that nudge us toward their most profitable outcomes. As Shoshana Zuboff writes in The Age of Surveillance Capitalism, “Today, surveillance capitalism offers a new template for our future: the machine hive in which our freedom is forfeit to perfect knowledge administered for others’ profit”. Zuboff cautions that this kind of surveillance weakens democracy because, without freedom to act and think independently, we lack the ability to make moral judgments and think critically, which are essential for a democratic society. Democracy is then further weakened by the unprecedented gathering of information and the influence that comes with it.

Empowerment > Personalization

Technology should augment human intellect and enhance our abilities. When platforms prioritize their business goals and keep people captive, our agency is undermined by the convenience of a device within arm’s reach at any given moment that promises personalized content powered by algorithms people don’t control. Instead, we must ask ourselves: how might we design technology in a way that empowers people? Let’s explore a few alternative possibilities that counteract the trade-offs we make for personalization.

The next phase in user experience will be to change our founding metaphors so that we can express our higher needs, not just our immediate preferences.

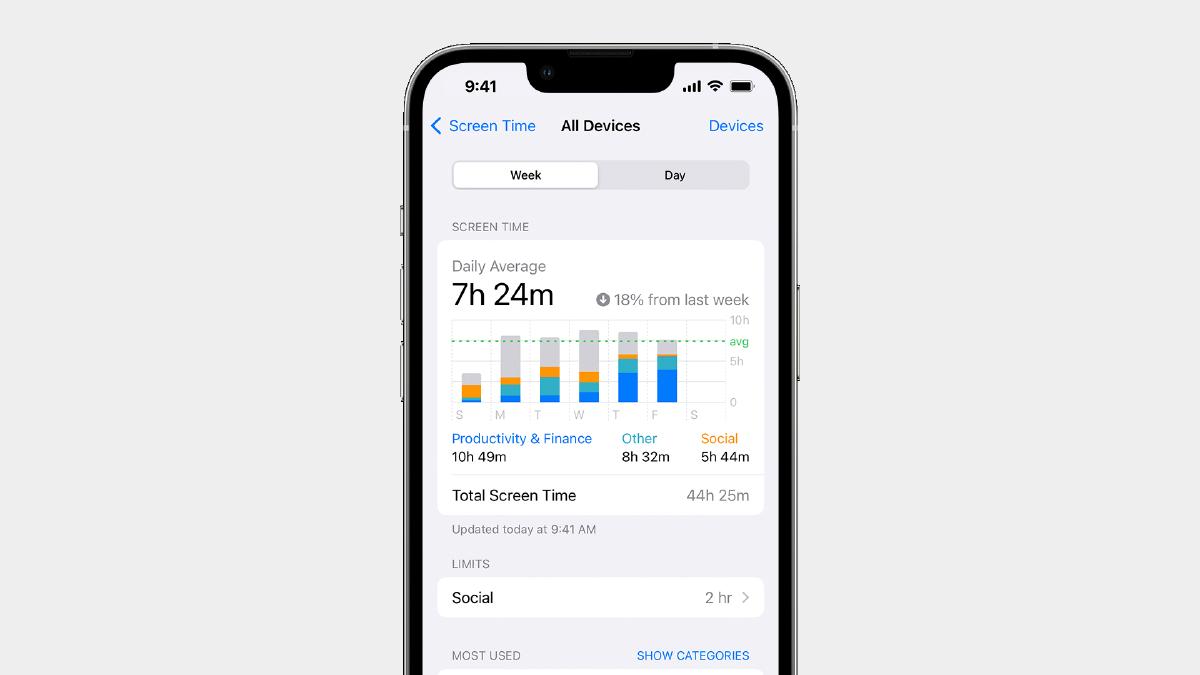

An early step towards empowerment has been simply making people aware of their digital activities. Device manufacturers have opted to promote usage awareness to encourage healthier digital habits with features such as Apple’s Screen Time and Google’s Digital Wellbeing initiative. These features can help people better understand their tech use and provide tools to pause distracting apps, set daily limits, and customize notifications. But is usage awareness enough?

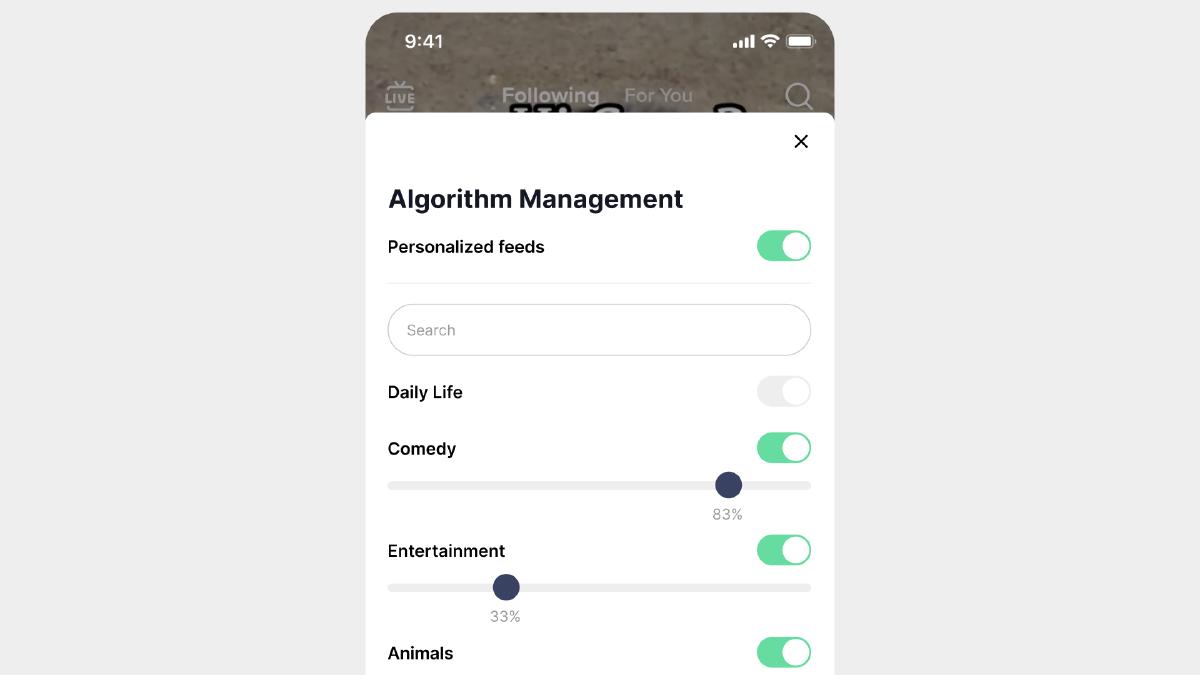

We can also give people the tools and authority to oversee and regulate the algorithms that significantly influence their daily experiences. This allows people to actively participate in shaping and customizing their own digital experiences, ensuring an online environment that aligns with their preferences, values and goals. People must be empowered to co-create with the algorithms, which requires striking a balance between trust and efficiency. We can build a stronger relationship between humans and machines by placing humans in the AI decision loop and empowering them with the ability to steer the algorithms that shape their experiences. For example, what if platforms enabled users to fine-tune the algorithm that feeds their experience?
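As a thought experiment, user-steerable ranking could look something like the sketch below. Every name here (`Item`, `UserControls`, `rank_feed`, `engagement_influence`) is hypothetical and invented for illustration; no existing platform API is implied. The assumption is that the platform exposes two dials: per-topic weights the user sets directly, and a slider for how much the platform's own engagement prediction is allowed to influence ordering.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    title: str
    topic: str
    predicted_engagement: float  # platform's own estimate, from 0 to 1


@dataclass
class UserControls:
    # topic -> multiplier chosen by the user; 0 mutes a topic, default is 1
    topic_weights: dict = field(default_factory=dict)
    # 1.0 = rank fully by predicted engagement, 0.0 = ignore it entirely
    engagement_influence: float = 1.0


def rank_feed(items, controls):
    """Order items by a score the user can steer, dropping muted topics."""

    def topic_weight(item):
        return controls.topic_weights.get(item.topic, 1.0)

    def score(item):
        # Blend the platform's prediction with a neutral baseline of 1.0.
        base = (
            controls.engagement_influence * item.predicted_engagement
            + (1.0 - controls.engagement_influence)
        )
        return topic_weight(item) * base

    visible = [item for item in items if topic_weight(item) > 0]
    return sorted(visible, key=score, reverse=True)


feed = [
    Item("Election explainer", "news", 0.90),
    Item("Weeknight pasta", "cooking", 0.50),
    Item("Celebrity feud", "gossip", 0.95),
]

# Default controls: the platform's engagement prediction decides the order.
print([i.title for i in rank_feed(feed, UserControls())])

# The user mutes gossip and asks for more cooking content.
steered = UserControls(topic_weights={"gossip": 0.0, "cooking": 2.0})
print([i.title for i in rank_feed(feed, steered)])
```

With the default controls the highest predicted engagement wins, so the gossip item leads the feed. Once the user mutes gossip and boosts cooking, the cooking item moves to the top and the gossip item disappears. The design choice worth noticing is that the controls are plain, inspectable values owned by the user rather than signals inferred from behavior.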

Lastly, we can change our relationship with devices altogether. What if technology pushed us more towards low interface and utility over consumption? If we could reduce screen time, we could reduce the likelihood of being seduced by the lure of infinite feeds. One exciting outcome of the increasing capability of AI is the potential to significantly reduce screen time and reliance on apps in many cases. We’re beginning to see personalized operating systems that provide a natural language interface and an emphasis on privacy and data control. These systems are early and have a way to go before they can replace smartphones, but it’s a promising glimpse at a future where we might not have to rely on screens.

Computers have become integral to every aspect of our lives. They have become small enough to wear or carry in our pockets and adorn every surface imaginable. They ensure we have content tailored to our interests within reach at any given moment thanks to machine learning algorithms that monitor and adapt to every interaction. However, they have also ushered in an era where time and attention are increasingly scarce resources. In addition to enhancing our abilities, technology has become a vehicle for extracting our attention, monetizing our personal information, and exploiting our psychological vulnerabilities. While personalization offers convenience and tailored experiences, it is crucial to consider the trade-offs. Privacy isn’t the only trade-off we make for personalization: it’s also used to shape, influence and guide our everyday choices and actions. We must design technology for empowerment and create technology that respects privacy, fosters well-being, and aligns with users' higher needs and values.

1. Marples, M. (2022). Decision fatigue drains you of your energy to make thoughtful choices. Here’s how to get it back. CNN Health. https://www.cnn.com/2022/04/21/health/decision-fatigue-solutions-wellness/
2. Rahnama, H., & Pentland, A. (2022). The New Rules of Data Privacy. Harvard Business Review. https://hbr.org/2022/02/the-new-rules-of-data-privacy
3. Figà Talamanca, G., & Arfini, S. (2022). Through the Newsfeed Glass: Rethinking Filter Bubbles and Echo Chambers. Philosophy & Technology, 35(1), 20. https://doi.org/10.1007/s13347-021-00494-z
4. Sha, P., & Dong, X. (2021). Research on Adolescents Regarding the Indirect Effect of Depression, Anxiety, and Stress between TikTok Use Disorder and Memory Loss. International Journal of Environmental Research and Public Health, 18(16), 8820. https://doi.org/10.3390/ijerph18168820
5. Amnesty International. (2023). Driven Into the Darkness: How TikTok’s ‘For You’ Feed Encourages Self-Harm and Suicidal Ideation. https://www.amnesty.org/en/documents/POL40/7350/2023/en/
